Build your own SQLite, Part 5: Evaluating queries

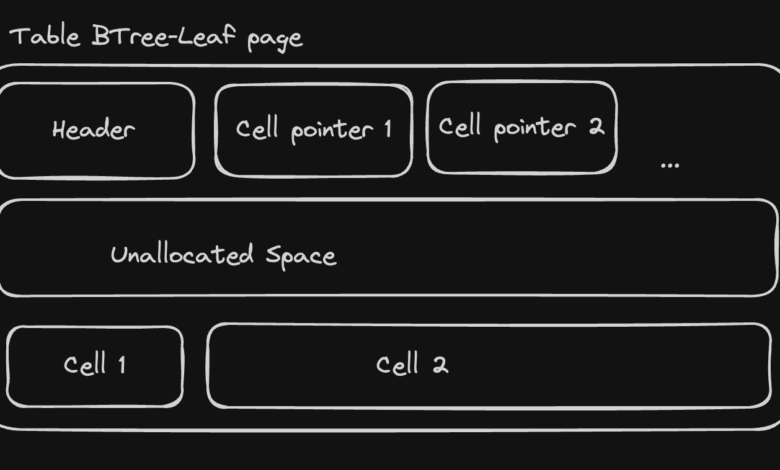

In the previous posts, we explored the SQLite file format and built a simple SQL parser. It's time to put these pieces together and implement a query evaluator! In this post, we will lay the groundwork for evaluating SQL queries and build a simple query engine that can handle basic SELECT statements. While our initial implementation won't support filtering, sorting, grouping, or joins, it will give us the foundation to add these features in future posts.

As usual, the complete source code for this post is available on GitHub.

Setting up our test database

Before we can evaluate queries, we need a database to query. We'll start by creating a simple database with a single table, table1, with two columns, id and value:

sqlite3 queries_test.db

sqlite> create table table1(id integer, value text);

sqlite> insert into table1(id, value) values

...> (1, '11'),

...> (2, '12'),

...> (3, '13');

sqlite> .exit

You might be tempted to use an existing SQLite database to test your queries, but keep in mind that our implementation may not be able to read the data from your database file.

This section is specific to the Rust implementation. If you are following along in another language, you can safely skip it!

Currently, our pager can only be used through an exclusive mutable reference. This was fine for our initial use cases, but as we start building more complex features, keeping this restriction would constrain our design. We'll make the pager shareable by wrapping its inner fields in Arc<Mutex<_>> and Arc<RwLock<_>>. This will allow us to effectively clone the pager and use it from multiple places without running into borrow checker issues. At this stage of the project, we could have chosen a simple Rc<RefCell<_>>, but we will eventually need to support concurrent access to the pager, so we'll use the thread-safe counterparts right away.

// src/pager.rs

- #[derive(Debug, Clone)]
+ #[derive(Debug)]
pub struct Pager<I: Read + Seek = std::fs::File> {
-     input: I,
+     input: Arc<Mutex<I>>,
      page_size: usize,
-     pages: HashMap<usize, page::Page>,
+     pages: Arc<RwLock<HashMap<usize, Arc<page::Page>>>>,
}

The read_page and load_page methods should be updated accordingly:

impl<I: Read + Seek> Pager<I> {
    pub fn read_page(&self, n: usize) -> anyhow::Result<Arc<page::Page>> {
        {
            // Fast path: look the page up under the read lock only.
            let read_pages = self
                .pages
                .read()
                .map_err(|_| anyhow!("failed to acquire pager read lock"))?;

            if let Some(page) = read_pages.get(&n) {
                return Ok(page.clone());
            }
        }

        let mut write_pages = self
            .pages
            .write()
            .map_err(|_| anyhow!("failed to acquire pager write lock"))?;

        if let Some(page) = write_pages.get(&n) {
            return Ok(page.clone());
        }

        let page = self.load_page(n)?;
        write_pages.insert(n, page.clone());
        Ok(page)
    }

    fn load_page(&self, n: usize) -> anyhow::Result<Arc<page::Page>> {
        // Pages are numbered starting from 1.
        let offset = n.saturating_sub(1) * self.page_size;

        let mut input_guard = self
            .input
            .lock()
            .map_err(|_| anyhow!("failed to lock pager mutex"))?;

        input_guard
            .seek(SeekFrom::Start(offset as u64))
            .context("seek to page start")?;

        let mut buffer = vec![0; self.page_size];
        input_guard.read_exact(&mut buffer).context("read page")?;

        Ok(Arc::new(parse_page(&buffer, n)?))
    }
}

Two things to note about the read_page method:

- The initial attempt to read the page from the cache is scoped in a block to limit the lifetime of the read lock, ensuring it is released before we try to acquire the write lock.

- After acquiring the write lock, we check again whether the page is already in the cache, in case it was inserted between the two lock acquisitions.
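The two points above describe a double-checked lookup. Here is a minimal, self-contained sketch of that pattern. It is not the pager itself: `PageCache`, its `get` method and the `loads` counter are hypothetical names introduced for illustration, and a `String` stands in for a parsed page.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, RwLock};

/// Hypothetical stand-in for the pager's page cache.
#[derive(Clone, Default)]
pub struct PageCache {
    pages: Arc<RwLock<HashMap<usize, Arc<String>>>>,
    // Counts how many times the slow path actually built a page.
    loads: Arc<AtomicUsize>,
}

impl PageCache {
    pub fn get(&self, n: usize) -> Arc<String> {
        // Fast path: the read guard is dropped at the end of this block,
        // so it is released before we ask for the write lock.
        {
            let pages = self.pages.read().expect("read lock poisoned");
            if let Some(page) = pages.get(&n) {
                return Arc::clone(page);
            }
        }

        let mut pages = self.pages.write().expect("write lock poisoned");
        // Second check: another thread may have inserted the page while
        // we were waiting for the write lock.
        if let Some(page) = pages.get(&n) {
            return Arc::clone(page);
        }

        // Slow path: build the page (a placeholder string here) and cache it.
        self.loads.fetch_add(1, Ordering::SeqCst);
        let page = Arc::new(format!("page {n}"));
        pages.insert(n, Arc::clone(&page));
        page
    }

    pub fn load_count(&self) -> usize {
        self.loads.load(Ordering::SeqCst)
    }
}
```

Because both the map and the counter live behind an `Arc`, cloning a `PageCache` yields another handle to the same cache. Several threads asking for the same page should therefore trigger a single load.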

Similarly, let's introduce an owned version of our Value enum that we'll use in the query evaluator:

#[derive(Debug, Clone)]
pub enum OwnedValue {
    Null,
    String(Rc<String>),
    Blob(Rc<Vec<u8>>),
    Int(i64),
    Float(f64),
}

impl<'p> From<Value<'p>> for OwnedValue {
    fn from(value: Value<'p>) -> Self {
        match value {
            Value::Null => Self::Null,
            Value::Int(i) => Self::Int(i),
            Value::Float(f) => Self::Float(f),
            Value::Blob(b) => Self::Blob(Rc::new(b.into_owned())),
            Value::String(s) => Self::String(Rc::new(s.into_owned())),
        }
    }
}

impl std::fmt::Display for OwnedValue {
    fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result {
        match self {
            OwnedValue::Null => write!(f, "null"),
            OwnedValue::String(s) => s.fmt(f),
            // Blobs are displayed as text, keeping only their ASCII bytes.
            OwnedValue::Blob(items) => {
                write!(
                    f,
                    "{}",
                    items
                        .iter()
                        .filter_map(|&n| char::from_u32(n as u32).filter(char::is_ascii))
                        .collect::<String>()
                )
            }
            OwnedValue::Int(i) => i.fmt(f),
            OwnedValue::Float(x) => x.fmt(f),
        }
    }
}

Finally, we can extend our Cursor struct with a method that returns the value of a field as an OwnedValue:

impl Cursor {
    pub fn owned_field(&self, n: usize) -> Option<OwnedValue> {
        self.field(n).map(Into::into)
    }
}
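To see why an owned value is useful, here is a small sketch of the borrowed-to-owned conversion. It assumes that the borrowed `Value` holds its text in a `Cow`, which the `into_owned` calls above suggest. The enums are trimmed-down stand-ins, and the `detach` helper is hypothetical.

```rust
use std::borrow::Cow;
use std::rc::Rc;

// Simplified stand-in for the borrowed `Value` (assumed shape).
#[derive(Debug, Clone)]
pub enum Value<'p> {
    Null,
    Int(i64),
    String(Cow<'p, str>),
}

// Simplified stand-in for `OwnedValue`.
#[derive(Debug, Clone, PartialEq)]
pub enum OwnedValue {
    Null,
    Int(i64),
    String(Rc<String>),
}

impl<'p> From<Value<'p>> for OwnedValue {
    fn from(value: Value<'p>) -> Self {
        match value {
            Value::Null => Self::Null,
            Value::Int(i) => Self::Int(i),
            // `into_owned` copies the text if it was only borrowed.
            Value::String(s) => Self::String(Rc::new(s.into_owned())),
        }
    }
}

/// Hypothetical helper: turns text borrowed from `page` into a value
/// that no longer depends on the lifetime of `page`.
pub fn detach(page: &str) -> OwnedValue {
    let borrowed = Value::String(Cow::Borrowed(page));
    borrowed.into()
}
```

The owned value can outlive the buffer it was read from. Because the string sits behind an `Rc`, cloning the value only bumps a reference count instead of copying the text.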

Evaluating SELECT statements

Our query engine will consist of two main components:

- an iterator-like Operator enum that represents nestable database operations, such as scanning a table or filtering rows. Our first implementation contains a single SeqScan operator that yields all the rows from a table.

- a Planner struct that takes a parsed SQL query and produces an Operator that can be evaluated to produce the result of the query.

Let's start by defining the Operator enum:

use anyhow::Context;

use crate::{cursor::Scanner, value::OwnedValue};

#[derive(Debug)]
pub enum Operator {
    SeqScan(SeqScan),
}

impl Operator {
    pub fn next_row(&mut self) -> anyhow::Result<Option<&[OwnedValue]>> {
        match self {
            Operator::SeqScan(s) => s.next_row(),
        }
    }
}

The result of evaluating a query is obtained by repeatedly calling the next_row method on the Operator until it returns None. Each value in the returned slice corresponds to a result column of the query.

The SeqScan struct is responsible for scanning a table and yielding its rows:

#[derive(Debug)]
pub struct SeqScan {
    fields: Vec<usize>,
    scanner: Scanner,
    row_buffer: Vec<OwnedValue>,
}

impl SeqScan {
    pub fn new(fields: Vec<usize>, scanner: Scanner) -> Self {
        // Allocated once and reused for every row.
        let row_buffer = vec![OwnedValue::Null; fields.len()];

        Self {
            fields,
            scanner,
            row_buffer,
        }
    }

    fn next_row(&mut self) -> anyhow::Result<Option<&[OwnedValue]>> {
        let Some(record) = self.scanner.next_record()? else {
            return Ok(None);
        };

        for (i, &n) in self.fields.iter().enumerate() {
            self.row_buffer[i] = record.owned_field(n).context("missing record field")?;
        }

        Ok(Some(&self.row_buffer))
    }
}

The SeqScan struct is initialized with a list of field indices to read from each record and a Scanner that yields a record for each row of the scanned table. As the number of fields to read is the same for every row, we can allocate a single buffer to store the values of the selected fields. The next_row method retrieves the next record from the scanner, extracts the requested fields (specified by their indices), and stores them in our buffer.
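To make the buffer reuse concrete, here is a self-contained sketch of the same idea over in-memory data. It rests on several assumptions: `VecScanner` is a hypothetical replacement for the real Scanner, fields are plain `i64` values, errors are plain strings rather than anyhow errors, and `collect_rows` is a helper added only to drive the scan.

```rust
// Hypothetical in-memory stand-in for the scanner: each record is a Vec of fields.
pub struct VecScanner {
    records: std::vec::IntoIter<Vec<i64>>,
}

pub struct SeqScan {
    fields: Vec<usize>,
    scanner: VecScanner,
    row_buffer: Vec<i64>,
}

impl SeqScan {
    pub fn new(fields: Vec<usize>, records: Vec<Vec<i64>>) -> Self {
        // One slot per selected field, allocated once and overwritten for each row.
        let row_buffer = vec![0; fields.len()];
        Self {
            fields,
            scanner: VecScanner { records: records.into_iter() },
            row_buffer,
        }
    }

    /// Returns the next projected row, or `None` once the scan is exhausted.
    pub fn next_row(&mut self) -> Result<Option<&[i64]>, String> {
        let Some(record) = self.scanner.records.next() else {
            return Ok(None);
        };
        for (i, &n) in self.fields.iter().enumerate() {
            self.row_buffer[i] = *record.get(n).ok_or("missing record field")?;
        }
        Ok(Some(self.row_buffer.as_slice()))
    }
}

/// Drives the scan to completion, copying each row out of the shared buffer.
pub fn collect_rows(mut scan: SeqScan) -> Result<Vec<Vec<i64>>, String> {
    let mut rows = Vec::new();
    while let Some(row) = scan.next_row()? {
        rows.push(row.to_vec());
    }
    Ok(rows)
}
```

Since `next_row` returns a slice borrowed from the operator, the caller has to finish with a row (or copy it, as `collect_rows` does) before asking for the next one. That is the price of reusing one buffer for the whole scan.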

Now that we have an Operator to evaluate SELECT statements, let's move on to the Planner struct that produces an Operator from a parsed SQL query:

use anyhow::{bail, Context, Ok};

use crate::{
    db::Db,
    sql::ast::{self, SelectFrom},
};

use super::operator::{Operator, SeqScan};

pub struct Planner<'d> {
    db: &'d Db,
}

impl<'d> Planner<'d> {
    pub fn new(db: &'d Db) -> Self {
        Self { db }
    }

    pub fn compile(self, statement: &ast::Statement) -> anyhow::Result<Operator> {
        match statement {
            ast::Statement::Select(s) => self.compile_select(s),
            stmt => bail!("unsupported statement: {stmt:?}"),
        }
    }
}

The Planner struct is initialized with a reference to the database and provides a compile method that takes a parsed SQL statement and returns the corresponding Operator. The compile method dispatches to a specific method for each type of SQL statement.

Let's see how to build an Operator for a SELECT statement:

impl<'d> Planner<'d> {
    fn compile_select(self, select: &ast::SelectStatement) -> anyhow::Result<Operator> {
        let SelectFrom::Table(table_name) = &select.core.from;

        let table = self
            .db
            .tables_metadata
            .iter()
            .find(|m| &m.name == table_name)
            .with_context(|| format!("invalid table name: {table_name}"))?;

        let mut columns = Vec::new();

        for res_col in &select.core.result_columns {
            match res_col {
                // `*` expands to every column of the table, in order.
                ast::ResultColumn::Star => {
                    for i in 0..table.columns.len() {
                        columns.push(i);
                    }
                }
                ast::ResultColumn::Expr(e) => {
                    let ast::Expr::Column(col) = &e.expr;
                    let (index, _) = table
                        .columns
                        .iter()
                        .enumerate()
                        .find(|(_, c)| c.name == col.name)
                        .with_context(|| format!("invalid column name: {}", col.name))?;
                    columns.push(index);
                }
            }
        }

        Ok(Operator::SeqScan(SeqScan::new(
            columns,
            self.db.scanner(table.first_page),
        )))
    }
}

First, we find the table metadata entry matching the table name of the SELECT statement. Then we iterate over the statement's result columns and build a list of the field indices to read from each record, either by expanding * into all columns or by looking up the column name in the table metadata.

Finally, we create a SeqScan operator that scans the entire table and yields the selected fields for each row.
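The column resolution step can be isolated into a small function, which makes it easy to try on its own. The sketch below is a simplified illustration, not the planner's actual code: `ResultColumn::Named` and `resolve_columns` are hypothetical names, table columns are plain string slices, and errors are plain strings.

```rust
// Hypothetical, simplified stand-in for the parsed result columns.
pub enum ResultColumn {
    Star,
    Named(String),
}

/// Maps each result column to the index of the matching table column.
pub fn resolve_columns(
    table_columns: &[&str],
    result_columns: &[ResultColumn],
) -> Result<Vec<usize>, String> {
    let mut indices = Vec::new();
    for col in result_columns {
        match col {
            // `*` expands to every column, in table order.
            ResultColumn::Star => indices.extend(0..table_columns.len()),
            ResultColumn::Named(name) => {
                let index = table_columns
                    .iter()
                    .position(|c| *c == name.as_str())
                    .ok_or_else(|| format!("invalid column name: {name}"))?;
                indices.push(index);
            }
        }
    }
    Ok(indices)
}
```

With the columns `id` and `value`, a `*` resolves to `[0, 1]`, while `value, id` resolves to `[1, 0]`. The order of the indices follows the order of the result columns in the query, not the order of the columns in the table.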

Query evaluation in the REPL

It's time to put our query evaluator to the test! We'll write a simple function that takes a raw SQL query and evaluates it:

fn eval_query(db: &db::Db, query: &str) -> anyhow::Result<()> {
    let parsed_query = sql::parse_statement(query, false)?;
    let mut op = engine::plan::Planner::new(db).compile(&parsed_query)?;

    while let Some(values) = op.next_row()? {
        let formatted = values
            .iter()
            .map(ToString::to_string)
            .collect::<Vec<_>>()
            .join("|");

        println!("{formatted}");
    }

    Ok(())
}

This function creates a simple pipeline: it parses the SQL query, builds an Operator with our planner, and then repeatedly calls next_row() on the resulting operator to retrieve and display each result row.
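The display step of this pipeline only relies on each value implementing `Display`. As a small illustration, it can be pulled out into a generic helper; `format_row` is a hypothetical name, not a function from the project.

```rust
use std::fmt::Display;

/// Joins the displayed form of each value with `|`.
pub fn format_row<T: Display>(values: &[T]) -> String {
    values
        .iter()
        .map(ToString::to_string)
        .collect::<Vec<_>>()
        .join("|")
}
```

For instance, `format_row(&[1, 11])` produces `1|11`, and an empty row produces an empty string.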

The final step is to use this function in the REPL loop:

// src/main.rs

// (...)

fn cli(mut db: db::Db) -> anyhow::Result<()> {
    print_flushed("rqlite> ")?;

    let mut line_buffer = String::new();

    while stdin().lock().read_line(&mut line_buffer).is_ok() {
        match line_buffer.trim() {
            ".exit" => break,
            ".tables" => display_tables(&mut db)?,
+           stmt => eval_query(&db, stmt)?,
-           stmt => match sql::parse_statement(stmt, true) {
-               Ok(stmt) => {
-                   println!("{:?}", stmt);
-               }
-               Err(e) => {
-                   println!("Error: {}", e);
-               }
-           },
        }

        print_flushed("\nrqlite> ")?;

        line_buffer.clear();
    }

    Ok(())
}

Now we can run the REPL and evaluate some simple SELECT statements:

cargo run -- queries_test.db

rqlite> select * from table1;

If all goes well, you should see the following output:

1|11

2|12

3|13

Conclusion

Our little database engine is starting to take shape! We can now parse and evaluate simple SELECT queries. But there is still a lot to cover before we can call it a fully functional database engine. In the next posts, we'll learn how to filter rows, read indexes, and implement sorting and grouping.

2025-02-20 01:32:00