DeepSeek and the Effects of GPU Export Controls

Last week, DeepSeek unveiled their V3 model, which was trained on 2,048 H800 GPUs – a fraction of the hardware used by OpenAI or Meta. DeepSeek claims that their model matches or exceeds many benchmarks set by GPT-4 and Claude.

What’s interesting is not just the results, but how they got there.

The Numbers Game

Let’s look at the raw numbers:

- Training cost: $5.5M (vs $40M for GPT-4)

- GPU count: 2,048 H800s (vs estimated 20,000+ H100s for large labs)

- Parameters: 671B

- Training: 2.788M GPU hours
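To put these figures in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only the numbers quoted in the list above. The implied hourly rate is simply the quoted cost divided by the quoted GPU hours, and the wall-clock estimate assumes all 2,048 GPUs ran concurrently for the entire run, which is a simplifying assumption rather than a reported fact.

```python
# Figures quoted in the list above.
gpu_hours = 2.788e6       # total H800 GPU hours
gpu_count = 2048          # GPUs in the training cluster
quoted_cost_usd = 5.5e6   # quoted training cost

# Price per GPU hour implied by the two quoted figures.
implied_rate = quoted_cost_usd / gpu_hours

# Wall-clock duration, assuming every GPU was busy for the whole run.
wall_clock_hours = gpu_hours / gpu_count
wall_clock_days = wall_clock_hours / 24

print(f"Implied rate: ${implied_rate:.2f} per GPU hour")   # about $1.97
print(f"Wall-clock time: {wall_clock_days:.1f} days")      # about 56.7 days
```

In other words, the headline cost works out to roughly two dollars per GPU hour over a run of about two months. It covers compute only, not salaries, research, or failed experiments.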

Recent research shows model training costs growing 2.4x per year since 2016. The prevailing assumption is that you need ever-larger GPU clusters to compete at the frontier. DeepSeek suggests otherwise.
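A 2.4x yearly growth rate compounds quickly. The short sketch below treats the quoted 2.4x figure as a constant annual multiplier (a simplification) and shows how fast costs escalate under that assumption. The function names are illustrative.

```python
import math

GROWTH_PER_YEAR = 2.4  # growth factor quoted above, assumed constant


def cost_multiplier(years: float, growth: float = GROWTH_PER_YEAR) -> float:
    """How many times more expensive training becomes after `years`."""
    return growth ** years


def doubling_time_years(growth: float = GROWTH_PER_YEAR) -> float:
    """Years needed for the cost to double at the given growth factor."""
    return math.log(2) / math.log(growth)


for years in (1, 2, 4, 8):
    print(f"After {years} year(s): {cost_multiplier(years):,.1f}x")

print(f"Doubling time: {doubling_time_years() * 12:.1f} months")
```

At that pace, costs double roughly every nine and a half months and grow more than a thousandfold over eight years, which is why a sharp drop in training cost stands out.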

Export Controls: Task Failed?

The US has banned exports of high-end GPUs to China to slow its AI development. DeepSeek had to work with H800s – restricted versions of the H100 with half the bandwidth. But this constraint may have accidentally spurred innovation.

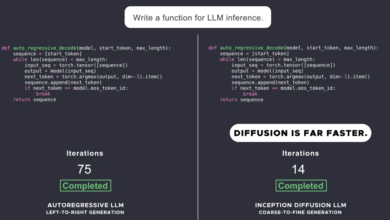

Instead of throwing computation at the problem, DeepSeek focused on architectural efficiency:

- FP8 mixed-precision training

- Algorithm and infrastructure optimization

- New training frameworks
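To give a feel for what training in FP8 involves, here is a minimal Python sketch that simulates rounding values to the 8-bit E4M3 floating-point format with a single per-tensor scale. This is an illustration under stated assumptions, not DeepSeek's implementation: the function names are hypothetical, the per-tensor scaling is a deliberate simplification, and real FP8 training runs on GPU hardware rather than in pure Python.

```python
import math

E4M3_MAX = 448.0     # largest finite value in the E4M3 format
E4M3_MIN_EXP = -6    # exponent of the smallest normal E4M3 value
MANTISSA_BITS = 3    # E4M3 stores 3 mantissa bits


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at the max."""
    if x == 0.0:
        return 0.0
    sign = math.copysign(1.0, x)
    mag = min(abs(x), E4M3_MAX)
    # frexp gives mag = m * 2**ex with 0.5 <= m < 1, so the binade exponent is ex - 1.
    _, ex = math.frexp(mag)
    e = max(ex - 1, E4M3_MIN_EXP)        # clamp so tiny values use subnormal spacing
    step = 2.0 ** (e - MANTISSA_BITS)    # gap between neighbouring representable values
    return sign * min(round(mag / step) * step, E4M3_MAX)


def quantize_dequantize(values):
    """Scale values into the E4M3 range, round them, and scale back (hypothetical helper)."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return list(values), 1.0
    scale = E4M3_MAX / amax              # map the largest magnitude onto E4M3_MAX
    return [round_to_e4m3(v * scale) / scale for v in values], scale


weights = [0.0123, -0.5, 0.25, 0.031, -0.0007]
restored, scale = quantize_dequantize(weights)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}")
```

With only three mantissa bits, each value keeps about two significant decimal digits. Getting a large model to train stably at that precision is the hard part, and that is where the engineering effort goes.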

They can’t access unlimited hardware, so they made the hardware they have work smarter. They were forced to solve a different, possibly more valuable problem.

The High-Flyer Factor

Context is important, though. DeepSeek is no ordinary startup – they are backed by High-Flyer, an $8B quantitative hedge fund. Their CEO Liang Wenfeng built High-Flyer from scratch and seems to be focused on fundamental research over quick profits:

“If the goal is to create applications, using the Llama structure for quick product deployment makes sense. But our destination is AGI, which means we need to learn new structures of the model to realize stronger model capabilities with limited resources.”

Beyond the Hype

We must be careful about over-interpreting these results. Yes, DeepSeek achieved impressive efficiency. No, this does not mean that export controls have “backfired” or that DeepSeek has cracked some magic formula.

What this shows is that the road to better AI isn’t just about throwing more GPUs at the problem. There is still huge room for fundamental improvements in how we train these models.

For developers, this is actually exciting news. This suggests that you don’t need a hyperscaler budget to do meaningful work on the frontier. True innovations can come from the resource-constrained, not the resource-rich.

The Road Ahead

The DeepSeek paper mentions that they are working on “breaking the architectural limitations of transformers.” Given their track record with efficiency improvements, this is worth watching.

2025-01-23 15:53:00