Diffusion Models – Nikhil R

Diffusion models are interesting

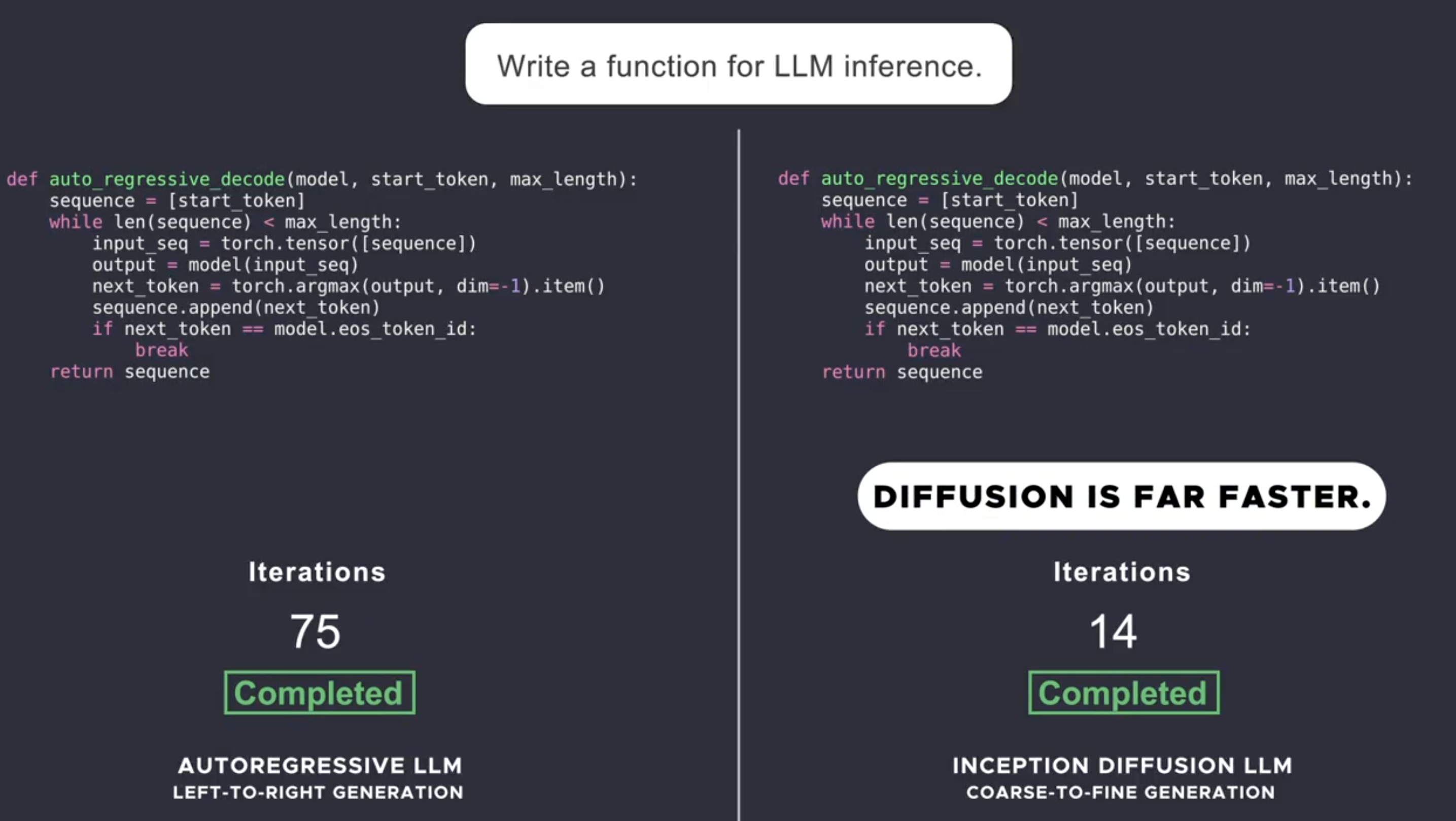

I came across a tweet a week or so ago saying that a company called Inception Labs had released a diffusion LLM (dLLM). Instead of being autoregressive and predicting tokens left to right, a dLLM starts with the whole response at once and then gradually refines it, putting good words together everywhere in parallel (start, middle, and end at the same time). This approach has historically worked for image and video models, and it is now reportedly outperforming similar-size LLMs at code generation.

- The company also claims 5-10x speed improvements and lower costs.
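To make "start, middle, and end at the same time" concrete, here is a toy sketch of masked-diffusion-style decoding. It assumes the common scheme of starting fully masked and, at each step, committing the masked positions the model is most confident about. The `toy_denoiser`, its confidence numbers (`PRIOR` plus a neighbour bonus), and the fixed schedule are all hypothetical stand-ins for a trained model; this is not how Inception Labs' model actually works.

```python
from typing import Callable, List, Optional, Tuple

MASK = None  # placeholder for a not-yet-generated position

# Hypothetical denoiser interface: given the partially filled sequence,
# return a (token, confidence) guess for every position.
Denoiser = Callable[[List[Optional[str]]], List[Tuple[str, float]]]


def diffusion_decode(length: int, denoiser: Denoiser, steps: int):
    """Start fully masked; each step, commit the most confident masked positions."""
    seq: List[Optional[str]] = [MASK] * length
    order: List[List[int]] = []  # positions committed at each step
    per_step = -(-length // steps)  # ceil(length / steps)
    for _ in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        if not masked:
            break
        preds = denoiser(seq)
        # Rank every still-masked position by confidence, highest first,
        # regardless of where it sits in the sentence.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        chosen = masked[:per_step]
        for i in chosen:
            seq[i] = preds[i][0]
        order.append(sorted(chosen))
    return seq, order


# Toy stand-in for a trained model (hypothetical): it always "knows" the
# target sentence, and its confidence is a made-up per-position prior plus
# a bonus for each already-revealed neighbour.
TARGET = "game theory studies strategic choices between rational players".split()
PRIOR = [0.9, 0.3, 0.2, 0.4, 0.1, 0.12, 0.35, 0.8]


def toy_denoiser(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
    preds = []
    for i, tok in enumerate(TARGET):
        revealed = sum(
            1 for j in (i - 1, i + 1) if 0 <= j < len(seq) and seq[j] is not MASK
        )
        preds.append((tok, PRIOR[i] + 0.25 * revealed))
    return preds


if __name__ == "__main__":
    seq, order = diffusion_decode(len(TARGET), toy_denoiser, steps=4)
    for step, positions in enumerate(order, 1):
        print(f"step {step}: {[TARGET[i] for i in positions]}")
    print(" ".join(seq))
```

With these invented confidences the first and last words are committed first and the middle of the sentence last. A real model's order comes from its learned confidences, not from a hand-written prior.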

Why are they interesting to me?

After spending the better part of the last 2 years reading, writing, and working on LLM evaluation, I see some first-hand benefits:

Traditional LLMs hallucinate. It's as if they confidently spin out text while making up the facts as they go. This is why they sometimes start a sentence confidently and end up suggesting something that is off by the end. dLLMs can generate the important parts first, validate them, and then continue with the rest of the generation.

- Ex: A CX chatbot first generates the policy version number and validates it before advising a customer about a potential policy issue.
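The validate-first idea in the chatbot example can be sketched as a two-phase loop: decode only the "critical" positions, check them, and fill in the rest only once they pass. This is a minimal sketch under loud assumptions. `propose` and `validate` are hypothetical callbacks standing in for a dLLM that can be told which positions to unmask and for a lookup against a real policy system. The template and version strings are made up. It shows the control flow, not any vendor's API.

```python
from typing import Callable, Dict, List, Optional

MASK = None  # placeholder for a not-yet-generated position

# Hypothetical: propose(seq, position, attempt) -> token for that position.
Propose = Callable[[List[Optional[str]], int, int], str]
# Hypothetical: validate({position: token}) -> True if the facts check out.
Validate = Callable[[Dict[int, str]], bool]


def generate_with_checkpoint(
    length: int,
    critical: List[int],
    propose: Propose,
    validate: Validate,
    max_attempts: int = 3,
) -> Optional[List[str]]:
    for attempt in range(max_attempts):
        seq: List[Optional[str]] = [MASK] * length
        # Phase 1: decode only the critical positions (e.g. the policy version).
        for i in critical:
            seq[i] = propose(seq, i, attempt)
        if not validate({i: seq[i] for i in critical}):
            continue  # re-draw the critical fields instead of shipping a bad fact
        # Phase 2: fill the remaining positions around the validated facts.
        for i in range(length):
            if seq[i] is MASK:
                seq[i] = propose(seq, i, attempt)
        return seq
    return None  # give up (or escalate to a human) after max_attempts


# Toy stand-ins (hypothetical): a fixed template and a fake policy registry.
VALID_VERSIONS = {"v3.0", "v3.1"}
TEMPLATE = ["Per", "policy", "<ver>", "your", "claim", "is", "covered"]
VERSION_POS = 2


def toy_propose(seq: List[Optional[str]], i: int, attempt: int) -> str:
    if i == VERSION_POS:
        # First draw is a non-existent version; the retry gets it right.
        return ["v2.9", "v3.1"][min(attempt, 1)]
    return TEMPLATE[i]


def toy_validate(facts: Dict[int, str]) -> bool:
    return facts[VERSION_POS] in VALID_VERSIONS


if __name__ == "__main__":
    out = generate_with_checkpoint(
        len(TEMPLATE), [VERSION_POS], toy_propose, toy_validate
    )
    print(out)
```

The key design choice is that nothing customer-facing is generated until the critical field has passed the check; an autoregressive model would only reach the version number partway through the sentence.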

Agents get better. A multi-step agent may be less likely to get stuck in loops with dLLMs. Planning, reasoning, and self-correction are an important part of agent workflows, and right now they are bottlenecked by the autoregressive architecture of LLMs. dLLMs could address this by keeping the entire plan coherent as it is generated. It's like looking a bit into the future (based on whatever context you have) and then making sure you don't get stuck.

Here is a recent model quickly answering "explain game theory to me." Notice that the end of some sentences is generated before the middle. It is a lot of fun to run a few questions and watch which words appear first.

You can try it yourself here.

2025-03-07 01:35:00