If GPUs Are So Good, Why Do We Still Use CPUs?

There's an old video from 2009 that recently went viral on Twitter. It is meant to give viewers an intuition for the difference between CPUs and GPUs.

You can watch it here; it's 90 seconds long:

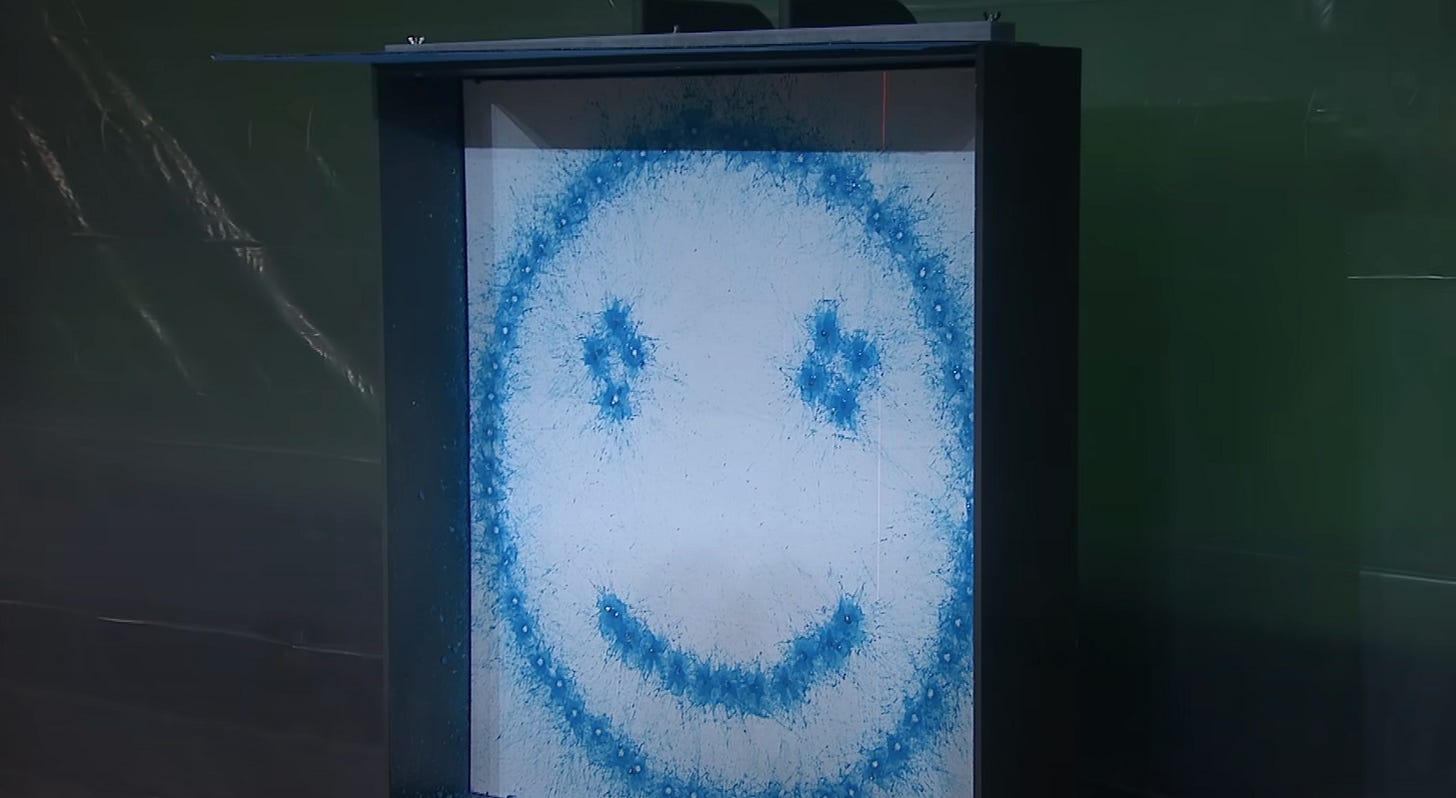

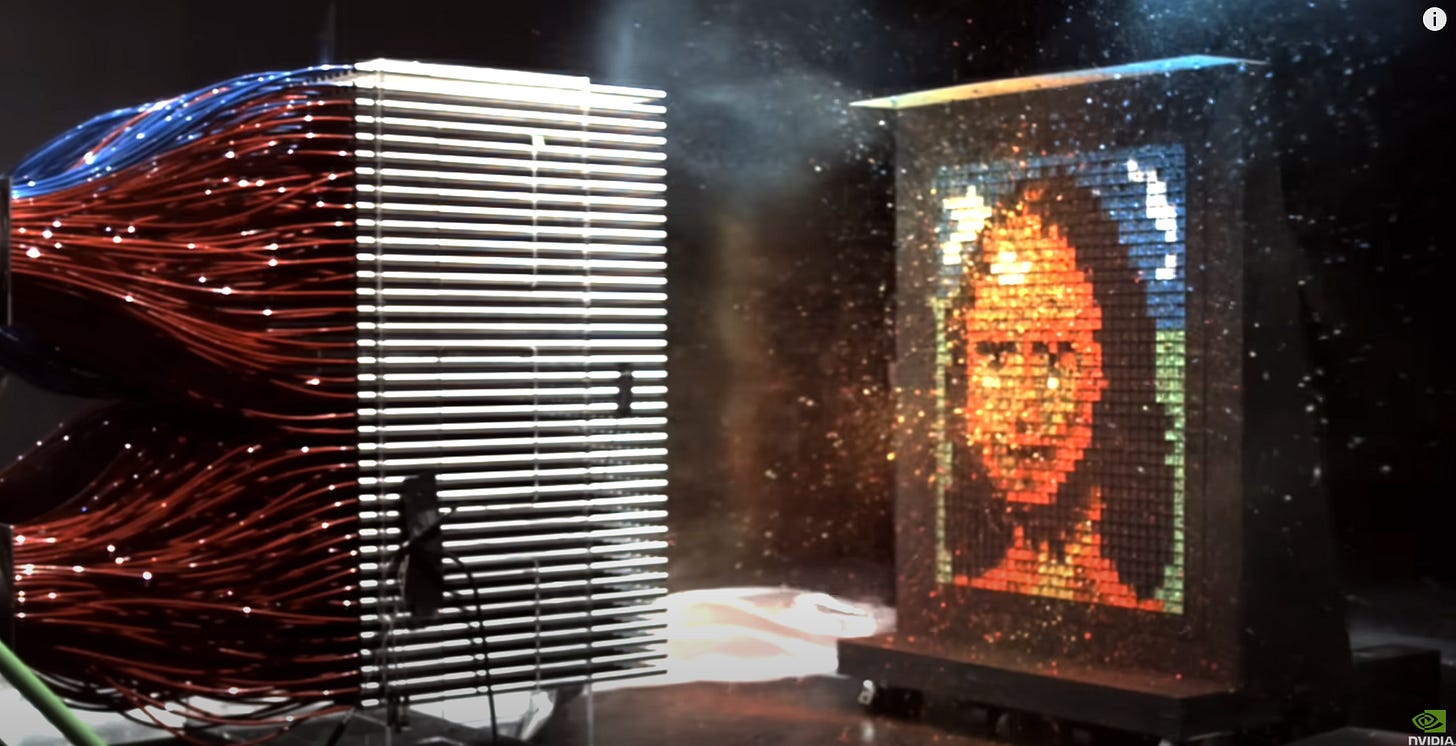

The idea is that a CPU and a GPU go head-to-head in a painting duel. The processors are hooked up to a machine that shoots paintballs.

The CPU takes a full 30 seconds to paint a basic smiley face, and then the GPU paints the Mona Lisa in an instant.

One takeaway from this video: CPUs are slow and GPUs are fast. Even if this is true, there’s a lot more nuance that the video doesn’t provide.

When we say that GPUs are faster than CPUs, we are talking about a measurement called TFLOPS: how many trillions of floating-point operations a processor can perform per second. For example, the Nvidia A100 GPU is capable of 9.7 TFLOPS, while the new 24-core Intel processor can do 0.33 TFLOPS. By this measure, the GPU is roughly 30x faster than the CPU.
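The 30x figure is just the ratio of the two throughput numbers quoted above. A quick check, using only those two figures (the variable names are chosen here for illustration):

```python
# Peak throughput figures quoted in the text, in TFLOPS
# (trillions of floating-point operations per second).
gpu_tflops = 9.7   # Nvidia A100
cpu_tflops = 0.33  # 24-core Intel processor

speedup = gpu_tflops / cpu_tflops
print(f"GPU/CPU throughput ratio: {speedup:.1f}x")
```

Keep in mind that this compares peak arithmetic throughput only; it says nothing about how well either chip handles a particular program.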

But the chip in my MacBook (an Apple M3) has both a CPU and a GPU. Why? Can't we just get rid of these slow CPUs?

Let's define two types of programs: sequential programs and parallel programs.

Sequential programs are programs where every instruction must run one after another. Here is an example:

    import random

    def random_multiply():
        # Start at 1 and repeatedly multiply by a random integer.
        n = 1
        for _ in range(100):
            # Each step needs the value of n left by the previous step.
            n *= random.randint(1, 10)
        return n

For 100 steps, we multiply n by a random number. The important quality of this program is that each step depends on the previous step. If you were doing this calculation by hand, you could not tell a friend, "You calculate steps 1 through 50 while I calculate steps 51 through 100." You must do all the steps in order.

Parallel programs are programs in which multiple instructions can be executed simultaneously because they do not depend on each other’s results. Here is an example:

    def parallel_multiply():
        numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
        results = []
        for n in numbers:
            # Each multiplication uses only its own input.
            results.append(n * 2)
        return results

In this case, we do ten multiplications that are completely independent of each other. The important thing is that order does not matter. If you want to divide the work with a friend, you can say, "You multiply the odd numbers while I multiply the even numbers." You can work separately and simultaneously and still get the correct results.
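The odd/even split described above can be sketched with two worker threads from Python's standard library. The helper name `double_all` is made up for this illustration. Note that in CPython, threads will not actually make arithmetic like this faster; the sketch only shows that the work can be divided and recombined without changing the answer.

```python
from concurrent.futures import ThreadPoolExecutor

def double_all(numbers):
    """Double every number in a list; no step depends on another."""
    return [n * 2 for n in numbers]

numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
odds = [n for n in numbers if n % 2 == 1]   # "your" share of the work
evens = [n for n in numbers if n % 2 == 0]  # "my" share of the work

# Two workers process their shares independently of each other.
with ThreadPoolExecutor(max_workers=2) as pool:
    odd_results, even_results = pool.map(double_all, [odds, evens])

# Combining the two shares gives the same values as doing it all alone.
combined = sorted(odd_results + even_results)
print(combined == double_all(numbers))
```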

In reality, this is a false dichotomy. Most large real-world applications contain a mix of sequential and parallel code, and every program has some percentage that is parallelizable.

For example, let's say we have a program that runs 20 calculations. The first 10 depend on one another, but the last 10 can be performed in parallel. We say that this program is "50% parallelizable" because half of the instructions are independent of each other, which means that they can run at the same time. To illustrate this, we can combine our two previous example snippets into a 50% parallelizable piece of code:

    import random

    def half_parallelizeable():
        # Part 1: Each step depends on the last
        sequential_list = [1]
        for _ in range(9):
            next_value = sequential_list[-1] * random.randint(1, 10)
            sequential_list.append(next_value)

        # Part 2: Each step is independent of the others
        parallel_results = []
        for n in sequential_list:
            parallel_results.append(n * 2)

        return sequential_list, parallel_results

The first half must run sequentially: every number in sequential_list depends on the value that precedes it. The second half takes the completed list and performs an independent operation on each value.

You can’t calculate any number in the first list until you have the previous one, but once you have the whole list, you can distribute the multiplication operations over as many workers as you can use.
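To see what "50% parallelizable" means for speed, here is a rough step-counting sketch. It assumes every calculation takes one time step, that the parallel half splits evenly across workers, and that coordinating the workers costs nothing; `steps_needed` is a hypothetical helper, not part of the snippets above.

```python
import math

def steps_needed(sequential_steps, parallel_steps, workers):
    """Time steps to finish, assuming each calculation takes one step
    and the parallel part is split evenly across the workers."""
    return sequential_steps + math.ceil(parallel_steps / workers)

baseline = steps_needed(10, 10, workers=1)  # 20 steps with a single worker
for workers in (1, 2, 5, 10, 1000):
    steps = steps_needed(10, 10, workers)
    print(f"{workers:>4} workers: {steps} steps, {baseline / steps:.2f}x faster")
```

Under these assumptions, the program never drops below 11 steps no matter how many workers are added, because the 10 sequential steps cannot be shared out. This ceiling on speedup is commonly known as Amdahl's law.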

In general, CPUs are better for sequential programs and GPUs are better for parallel programs. This is due to a fundamental difference in the design of CPUs and GPUs.

CPUs have a few large cores (Apple’s M3 has an 8-core CPU), and GPUs have many small cores (Nvidia’s H100 GPU has thousands of cores).

This is why GPUs are so good at running highly parallel programs – they have thousands of simple cores that can perform the same operation on different pieces of data simultaneously.

Rendering video game graphics is an example of a task that requires many simple, repeated calculations. Think of your video game screen as a giant matrix of pixels. If you suddenly turn your character to the right, every pixel must be recalculated with a new color value. Fortunately, the calculation for the pixels at the top of the screen is independent of the calculation for the pixels at the bottom. So the work can be divided among the GPU's thousands of cores. This is why GPUs are so important for gaming.
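The independence of pixels can be shown with a toy example. Everything here is invented for illustration: the tiny screen size, the `shade` formula, and the `render_rows` helper are stand-ins, not how a real renderer computes colors. The only point is that each pixel's value depends on its own coordinates and the camera angle, never on another pixel.

```python
WIDTH, HEIGHT = 8, 6  # a tiny hypothetical "screen"

def shade(x, y, camera_angle):
    """Hypothetical per-pixel color: depends only on this pixel's
    coordinates and the camera angle, never on another pixel."""
    return (x * 31 + y * 17 + camera_angle) % 256

def render_rows(rows, camera_angle):
    """Compute the colors for a subset of the screen's rows."""
    return {y: [shade(x, y, camera_angle) for x in range(WIDTH)] for y in rows}

angle = 90  # the character has just turned to the right

# Render the whole frame in one pass...
full_frame = render_rows(range(HEIGHT), angle)

# ...or render the top and bottom halves separately, as two workers could.
top = render_rows(range(0, HEIGHT // 2), angle)
bottom = render_rows(range(HEIGHT // 2, HEIGHT), angle)

print({**top, **bottom} == full_frame)
```

Because the halves never read each other's results, the same split could be taken further, down to one worker per pixel.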

CPUs are significantly slower than GPUs on highly parallel tasks, such as multiplying a matrix of 10,000 independent numbers. However, they excel at sophisticated sequential processing and complex decision making.

Think of a CPU core as a chef in a busy restaurant kitchen. This chef can:

- Quickly adapt their cooking plan when a VIP guest arrives with special dietary requirements
- Seamlessly switch between preparing a delicate sauce and checking the roast vegetables
- Manage unexpected situations such as power outages by reorganizing the entire kitchen workflow
- Orchestrate multiple dishes so they all arrive hot and fresh at exactly the right time
- Maintain food quality while juggling multiple orders in various states of completion

In contrast, GPU cores are like a hundred line cooks who are good at repetitive tasks: they can cut vegetables in a flash, but they can't efficiently run the entire kitchen. If you ask a GPU to handle the constant changes that a dinner service demands, it will struggle to adapt.

This is why CPUs are essential for running your operating system. Modern computers face a constant stream of unpredictable events: apps start and stop, network connections drop, files are accessed, and users click randomly on the screen. The CPU excels at juggling all these tasks while keeping the system responsive. It can quickly switch from helping Chrome render a webpage to processing a Zoom video call to handling a new USB device connection, all while keeping track of system resources and ensuring that each application gets its fair share of attention.

So while GPUs excel at parallel processing, CPUs are still essential for their unique ability to handle complex logic and adapt to changing conditions. Modern chips like Apple's M3 have both, combining the flexibility of the CPU with the computing power of the GPU. In fact, a more accurate version of the painting video would show the CPU handling the image download and memory allocation before handing the work to the GPU to rapidly render the pixels.

2025-01-08 04:59:00