Why AI is dumber and not scary at all (pt. 1)

TL;DR: AI doesn't think, and it never has. Technological change has happened before, and people adjusted as needed.

Edit 3/8/2025: I originally wrote this a couple of years ago, in March 2023. A lot has changed in the field since then, but weirdly (or maybe unfortunately) the points still stand.

Wherever I go I keep hearing “Oh no, AI will take my job”, “Oh no, is Skynet coming?”, “Oh no, AI will steal my husband / wife”.

And in some sense it is true. AI has the potential to upend entire industries and disrupt the next few decades of technology (and maybe your relationship too, idk 🤷🏾‍♂️)

Every other day you see news about AI. Midjourney, DALL-E, Stable Diffusion, Flux, Sora (the list goes on), with images and videos that look very beautiful. Every other LinkedIn post says “

Hell, a chatbot company, Replika, even offered users AI companions they could talk dirty to (but then I also get the feeling that people who use it seriously are not exactly ethical …)

These are crazy times we live in, and it is hard not to fear all this AI that looks like magic in this brave new world.

Any sufficiently advanced technology is indistinguishable from magic

-Arthur C. Clarke

But once you're aware of how a magic trick works, it ceases to be magic.

At its heart, all AI is just a pattern recognition machine. These models are simply algorithms designed to maximize or minimize an objective function. There is no real thought involved. It's just math. If you think that somewhere in all that probability theory and linear algebra there is a soul, I think you need to explain where it is. (Author's note: There are arguments that things like bananas, rocks, and large enough integers are conscious, but we'll keep that for another post)
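To make "minimize an objective function" concrete, here is a minimal sketch in plain Python. It is an illustration, not how any particular production model is trained: the function name `fit_line`, the toy data, and the learning rate are all assumptions I made up for the example. The objective is mean squared error, and gradient descent just nudges two numbers until the error stops shrinking.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    Hypothetical helper for illustration; lr and steps are arbitrary choices
    that happen to converge on the toy data below.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the objective (1/n) * sum((w*x + b - y)^2) w.r.t. w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step downhill: no thinking, just arithmetic
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

The "learning" here is a loop that subtracts a gradient. Real models have billions of parameters instead of two, but the loop has the same shape.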

Formally, what these models do is learn the probability distribution of the data. Put simply, these models learn patterns from past data and then make predictions. And yes, this works because text and images also have distributions that an AI can learn and then predict from (how to get from these simple statements to models such as ChatGPT or DALL-E is another post(s)). Large language models (LLMs) are, for the most part, next-word prediction, and I think it is reasonable to say that this has given us a lot of results.
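Here is about the smallest next-word predictor I can think of, as a hedged sketch: a bigram model that counts which word follows which and turns the counts into probabilities. This is not how ChatGPT works internally (LLMs use neural networks over tokens, not lookup tables), and the function names and the toy corpus are made up for illustration. The underlying idea, estimating a distribution from past text and predicting from it, is the same.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words followed it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Estimated P(next word | word), straight from the counts."""
    following = counts.get(word, Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}


def predict_next(counts, word):
    """Most likely next word, or None if the word never appeared in training."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


corpus = "the cat sat on the mat the cat ate the fish"  # toy training data
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(predict_next(model, "the"))     # cat
print(predict_next(model, "dog"))     # None: never seen, nothing to predict
```

Notice what happens with "dog": the model has nothing to say about a word it never saw. Bigger models smooth this over far better, but they are still predicting from what was in the data.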

Learning patterns from text and images across the breadth of human civilization is, in and of itself, no small feat, but granted, there is no thinking involved. It's an algorithm trying to maximize its objective. The objective is to best imitate the data seen in the past. It is very good at picking up patterns that exist, but truly out-of-distribution, original thoughts are difficult if not impossible at the moment. And even if something looks original, I'd wager it matches some training data at some point, somewhere.

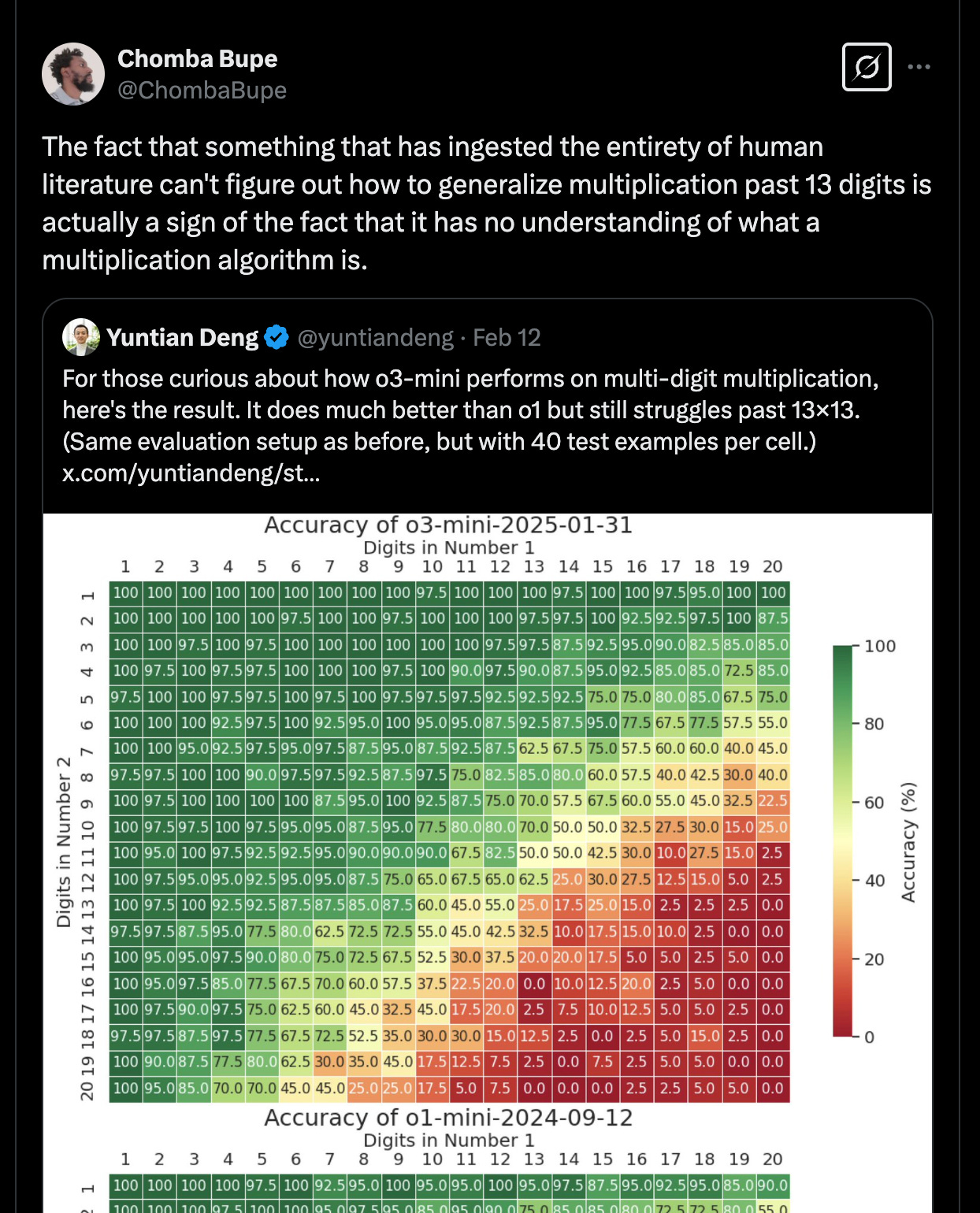

What is the end goal of adding complexity to these models? Every few months or so we get a new model that is supposedly much better. Machines with large datasets can fit more complex patterns, but they fail at actual reasoning by manipulating logic (see some interesting work by Léon Bottou: From Machine Learning to Machine Reasoning) and, more importantly, seem to lack understanding. Even some of the leading models still cannot multiply:

Sure, with millions upon millions of training examples, of course you can imitate intelligence. If you already know what is on the test, the usual patterns of test answers, or even the answer key itself, then are you really intelligent? Or are you just regurgitating information from billions of past tests? I think the sentiment here echoes John Searle and his Chinese room argument.

Let's not forget that machine learning and AI models are not always right. We have all seen examples of LLMs “hallucinating”, saying things that are just wrong. In fact, a model that is always right should be suspect: it may mean that test data leaked into the training data, i.e. the AI got a “peek” at the answer key. Image generation is good but far from perfect. There are no doubt some striking results, but look properly and even a layman can tell something is off. Most artificially generated images still have an uncanny quality to them.
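The "peek at the answer key" problem is easy to sketch. The snippet below is a rough illustration, assuming the crudest possible definition of leakage: a test example that also appears in the training set after trimming whitespace and lowercasing. The names `normalize` and `leaked_examples` and the toy questions are hypothetical, and real contamination checks are fuzzier than exact matching.

```python
def normalize(example):
    """Collapse whitespace and case so trivially different copies still match."""
    return " ".join(example.lower().split())


def leaked_examples(train, test):
    """Return the test examples that also appear in the training data."""
    seen = {normalize(x) for x in train}
    return [x for x in test if normalize(x) in seen]


# Toy data, made up for illustration
train = ["What is 2 + 2?", "Name the capital of France.", "What is 12 x 12?"]
test = ["what is 12 x 12?", "What is 123 x 456?"]

leaks = leaked_examples(train, test)
print(leaks)                   # ['what is 12 x 12?']
print(len(leaks) / len(test))  # 0.5
```

If half the exam was already in the study material, a high score tells you about memory, not understanding.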

This video by Dmitri Blanco (a finance guy I follow on YouTube) does a good job of intelligently covering the main points and criticisms of the entire AI cycle

Unless something fundamental changes in the way these models are developed, I don't see AI as a real threat to most jobs.

Let's look at the history of past technological changes. The mechanical loom was one of the key advances at the beginning of the Industrial Revolution, and the Luddites reacted negatively to it. Painters reacted negatively to photography. Oddly enough, an anime recap channel has a very good breakdown of these points:

The analogy is there. The mechanical loom automated simple work, much as AI automates work today. We used to have human alarm clocks as well: people who went from house to house and knocked on your window to wake you in time for work. Should we mourn the loss of that line of work? We used to wash dishes and clothes by hand, and that has been automated too. The list goes on.

Take the software field for example. Today, we have AI tools such as Windsurf, Cursor, Copilot, and others that can write boilerplate code in seconds. If it only automates the boring boilerplate code and menial tasks, isn't that what we wanted in the first place? To no longer need to (metaphorically) wash the dishes and clothes?

Sure, Anthropic's models can make a really cool todo app or cloth simulator, but that is not what real software systems are about, which includes making sure something should be built in the first place, among many other things. AI seems to miss the bigger picture in these scenarios: knowing the right questions to ask and the right problems to solve. It cannot replace software engineers anytime soon, in my opinion. Also think of the Jevons paradox. Even if AI gets good enough to fully replace some jobs, this could increase the demand for those jobs.

Industry experience leads me to believe that there is a fundamental lack of trust in black box systems, and that people will never be completely taken out of the loop. Having AI instead of people in a specific role looks different in practice than it does on paper. Sure, you can write a condolence letter for a tragedy or some sensitive subject matter using AI (see Vanderbilt), but should you? Sure, you can put AI in charge of customer support, but would a customer want that?

Hot take: If your job is partly or completely eliminated by AI, that's a good thing. If your work consists of repetitive patterns or routine labor, AI automation is a good thing. Change is inevitable. You can't stop the train, as they say. It will kill some jobs but not all. Overall, I am sure it will give birth to whole new systems that we have not even conceived of yet and, one would hope, to more meaningful and fulfilling pursuits.


2025-03-09 00:25:00